Groq’s $20,000 LPU chip breaks AI performance records to rival GPU-led industry

Groq’s LPU Inference Engine, a dedicated Language Processing Unit, has set a new benchmark in processing efficiency for large language models.

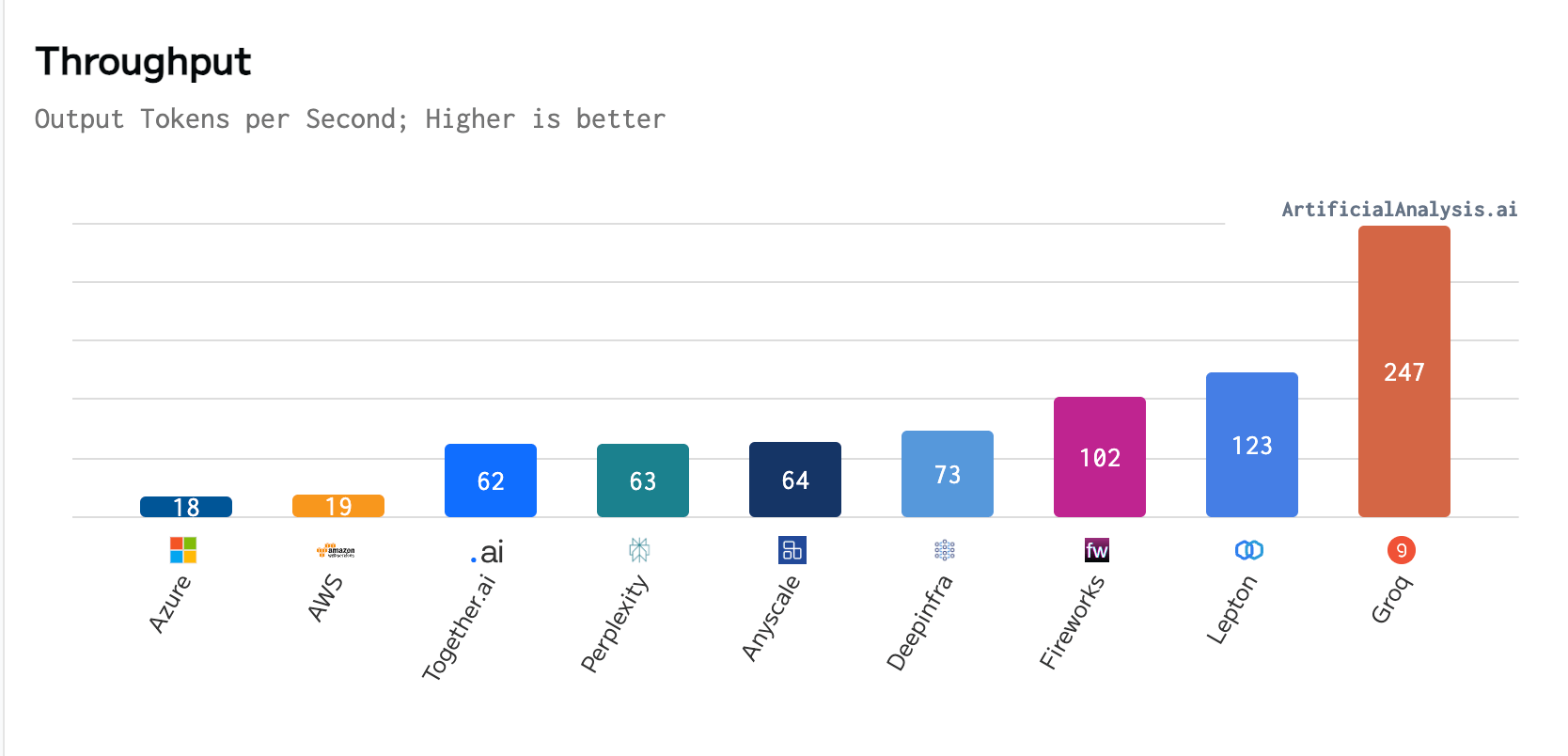

In a recent benchmark conducted by ArtificialAnalysis.ai, Groq outperformed eight other participants across several key performance indicators, including latency vs. throughput and total response time. Groq’s website states that the LPU’s exceptional performance, particularly with Meta AI’s Llama 2-70b model, meant “axes had to be extended to plot Groq on the latency vs. throughput chart.”

According to ArtificialAnalysis.ai, the Groq LPU achieved a throughput of 241 tokens per second, significantly surpassing other hosting providers. This is double the speed of competing solutions and potentially opens up new possibilities for large language models across various domains. Groq’s internal benchmarks further emphasized this achievement, claiming 300 tokens per second, a speed that legacy solutions and incumbent providers have yet to come close to.
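Throughput figures are easier to interpret as per-token latency. The short sketch below converts the two quoted throughputs into milliseconds per token and into the time needed to stream a response. The 500-token response length is a hypothetical value chosen for illustration, and the calculation deliberately ignores time-to-first-token, network overhead, and batching.

```python
# Convert a steady-state decode throughput (tokens/s) into per-token latency
# and the time needed to stream a response of a given length.
# Simplification: ignores time-to-first-token, network overhead, and batching.


def ms_per_token(tokens_per_second: float) -> float:
    """Average milliseconds spent producing each output token."""
    return 1000.0 / tokens_per_second


def seconds_for_response(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds to generate num_tokens at a constant throughput."""
    return num_tokens / tokens_per_second


# Throughputs quoted in the article: 241 tokens/s (ArtificialAnalysis.ai)
# and 300 tokens/s (Groq's internal benchmarks).
for label, tps in [("ArtificialAnalysis.ai", 241.0), ("Groq internal", 300.0)]:
    # 500 tokens is a hypothetical response length used only for illustration.
    print(f"{label}: {ms_per_token(tps):.2f} ms/token, "
          f"{seconds_for_response(500, tps):.2f} s for 500 tokens")
```

By the same arithmetic, a provider running at half the throughput would spend twice as long on every token.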

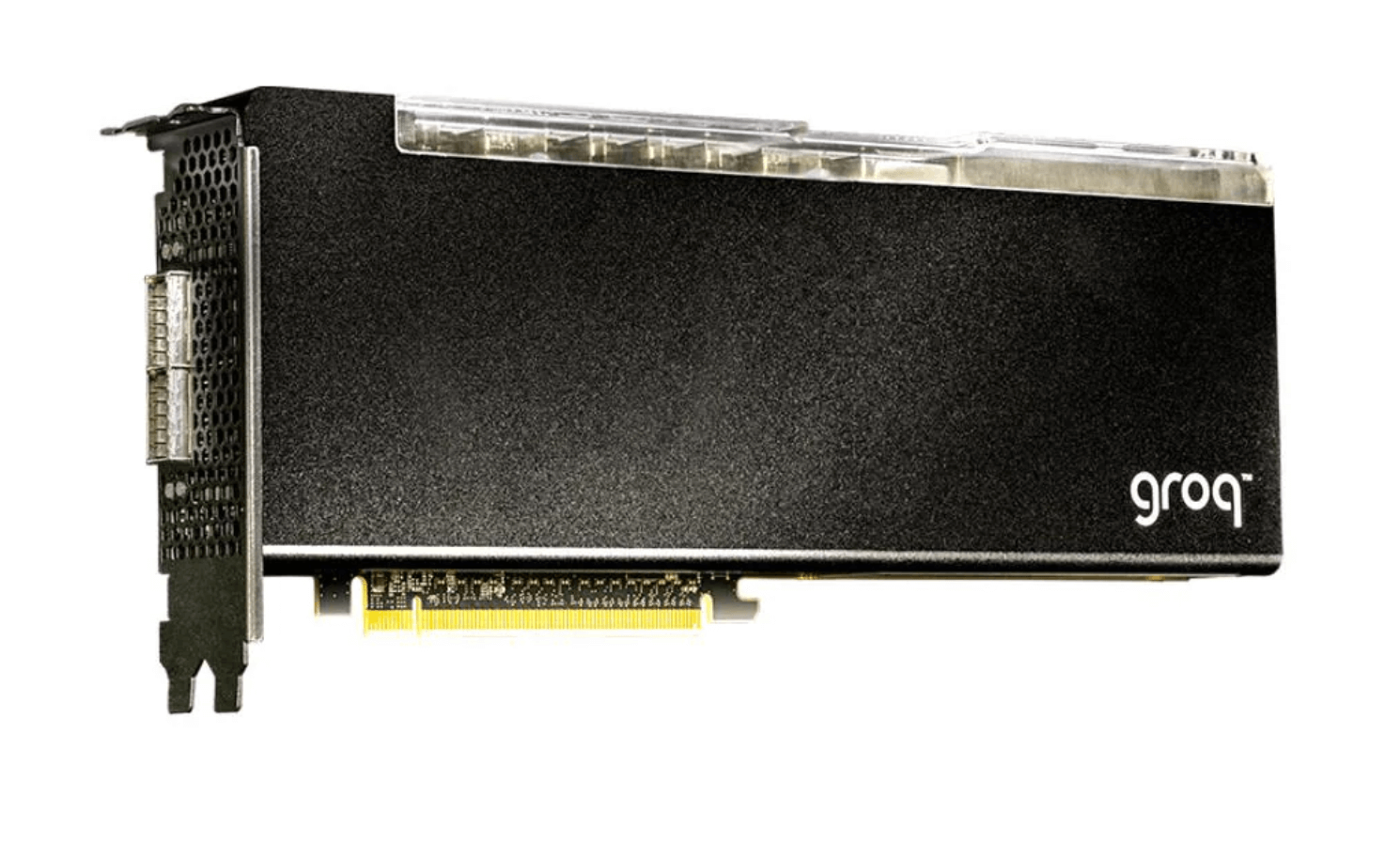

The GroqCard™ Accelerator, priced at $19,948 and available to buyers, lies at the heart of this innovation. Technically, it delivers up to 750 TOPS (INT8) and 188 TFLOPS (FP16 @900 MHz), alongside 230 MB of SRAM per chip and up to 80 TB/s of on-die memory bandwidth, outperforming traditional CPU and GPU setups, particularly in LLM tasks. This performance leap is attributed to the LPU’s ability to significantly reduce computation time per word and alleviate external memory bottlenecks, thereby enabling faster text sequence generation.
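To put the 230 MB SRAM figure in perspective, here is a back-of-envelope sketch estimating how many chips would be needed just to hold Llama 2-70b’s weights on-chip. It assumes a nominal 70 billion parameters, one byte per parameter at INT8 (two at FP16), decimal megabytes, and no space for activations or KV cache. These are illustrative assumptions, not Groq’s published deployment configuration, and `chips_to_hold_weights` is a hypothetical helper.

```python
import math

# Rough capacity estimate using the 230 MB-per-chip SRAM figure quoted above.
# Assumptions (not from the article): nominal parameter count, fixed bytes per
# parameter, decimal megabytes, and no room reserved for activations/KV cache.

SRAM_BYTES_PER_CHIP = 230 * 10**6


def chips_to_hold_weights(num_params: float, bytes_per_param: float) -> int:
    """Minimum number of chips whose combined SRAM fits the raw weights."""
    total_bytes = num_params * bytes_per_param
    return math.ceil(total_bytes / SRAM_BYTES_PER_CHIP)


# Llama 2-70b is used as the example because the article benchmarks it.
print("INT8:", chips_to_hold_weights(70e9, 1))
print("FP16:", chips_to_hold_weights(70e9, 2))
```

Under these assumptions the INT8 weights alone exceed the SRAM of 300 chips, so serving a model of this size would involve partitioning it across many chips.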

Compared with NVIDIA’s similarly priced flagship A100 GPU, the Groq card is superior in tasks where speed and efficiency in processing large volumes of simpler data (INT8) are critical, even when the A100 uses advanced techniques to boost its performance. However, in more complex data processing tasks (FP16) that require greater precision, the Groq LPU does not reach the performance levels of the A100.
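The INT8/FP16 distinction comes down to numeric precision. The sketch below uses generic symmetric per-tensor quantization, a common textbook scheme assumed here for illustration and not the method used by Groq or NVIDIA, to show how floating-point weights are mapped onto 8-bit integers and what is lost along the way. The weight values and function names are hypothetical.

```python
# Symmetric per-tensor INT8 quantization: map floats onto integers in
# [-127, 127] with a single scale factor, then map them back.
# Generic illustrative scheme, not Groq's or NVIDIA's specific method.


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Return (int8 codes, scale) for a list of floats."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Approximately reconstruct the original floats."""
    return [c * scale for c in codes]


weights = [0.82, -1.27, 0.0031, 0.5]  # hypothetical weight values
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f} (error {abs(w - r):.4f})")
```

The smallest weight rounds to zero because it falls below half of one quantization step; higher-precision formats such as FP16 reduce this kind of loss at the cost of more bits per value.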

In reality, both components excel in different aspects of AI and ML computation, with the Groq LPU card being exceptionally competitive at running LLMs quickly while the A100 leads elsewhere. Groq is positioning the LPU as a tool for running LLMs rather than for raw compute or fine-tuning models.

Querying Groq’s Mixtral 8x7b model on its website produced the following response, which was processed at 420 tokens per second:

“Groq is a highly efficient tool for running machine learning models, particularly in production environments. While it may not be the best choice for model tuning or training, it excels at executing pre-trained models with high performance and low latency.”

A direct comparison of memory bandwidth is less straightforward because of the Groq LPU’s focus on on-die memory bandwidth, which significantly benefits AI workloads by reducing latency and increasing data transfer rates within the chip.

Evolution of computer components for AI and machine learning

The introduction of the Language Processing Unit by Groq could be a milestone in the evolution of computing hardware. Traditional PC components (CPU, GPU, HDD, and RAM) have remained relatively unchanged in their basic form since GPUs emerged as distinct from integrated graphics. The LPU introduces a specialized approach focused on optimizing the processing capabilities of LLMs, which could become increasingly advantageous to run on local devices. While services like ChatGPT and Gemini run through cloud APIs, onboard LLM processing offers significant benefits for privacy, efficiency, and security.

GPUs, originally designed to offload and accelerate 3D graphics rendering, have become a critical component for processing parallel tasks, making them indispensable in gaming and scientific computing. Over time, the GPU’s role expanded into AI and machine learning, thanks to its ability to perform many operations concurrently. Despite these advancements, the fundamental architecture of these components has largely stayed the same, focused on general-purpose computing tasks and graphics rendering.

The arrival of Groq’s LPU Inference Engine represents a paradigm shift, specifically engineered to address the unique challenges presented by LLMs. Unlike CPUs and GPUs, which are designed for a broad range of applications, the LPU is tailored to the computationally intensive and sequential nature of language processing tasks. This focus allows the LPU to overcome the limitations of traditional computing hardware when handling the specific demands of AI language applications.
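The “sequential nature” of language processing refers to autoregressive generation: a model emits one token at a time and feeds each new token back in before producing the next. The toy loop below illustrates that dependency. `generate` and `toy_next_token` are hypothetical names, and the stand-in rule replaces a real model’s forward pass. Because the steps cannot run in parallel for a single sequence, total generation time is roughly the number of tokens multiplied by the per-token latency.

```python
from typing import Callable, List

# Toy illustration of sequential (autoregressive) generation: each new token
# is computed from all tokens produced so far, so step t cannot start until
# step t-1 has finished. The "model" is a hypothetical stand-in function.


def generate(prompt: List[int],
             next_token: Callable[[List[int]], int],
             max_new_tokens: int,
             eos_token: int) -> List[int]:
    """Greedy autoregressive decoding loop."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # depends on the full history so far
        tokens.append(tok)
        if tok == eos_token:      # stop early at end-of-sequence
            break
    return tokens


def toy_next_token(history: List[int]) -> int:
    # Hypothetical rule: next id is the sum of the last two ids modulo 10.
    return (history[-1] + history[-2]) % 10


print(generate([1, 1], toy_next_token, max_new_tokens=8, eos_token=0))
```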

One of the key differentiators of the LPU is its superior compute density and memory bandwidth. The LPU’s design allows it to process text sequences much faster, primarily by reducing the computation time per word and eliminating external memory bottlenecks. This is a critical advantage for LLM applications, where generating text sequences quickly is paramount.

Unlike traditional setups, where CPUs and GPUs rely on external RAM, on-die memory is integrated directly into the chip itself, offering significantly lower latency and higher bandwidth for data transfer. This architecture allows rapid access to data, which is crucial for the processing efficiency of AI workloads, by eliminating the time-consuming trips data must make between the processor and separate memory modules. The Groq LPU’s on-die memory bandwidth of up to 80 TB/s showcases its ability to handle the vast data requirements of large language models more efficiently than GPUs, which may boast high off-chip memory bandwidth but cannot match the speed and efficiency of the on-die approach.
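The bandwidth argument can be made concrete with a simple lower bound: if the weights held on a chip must be read once for every generated token, memory traffic alone takes at least bytes / bandwidth seconds per token. This sketch assumes each chip streams its full 230 MB of SRAM per token and compares the quoted 80 TB/s on-die figure with a hypothetical 2 TB/s external-memory figure chosen only for illustration. It ignores compute time, interconnect, and caching, and `memory_time_us` is a hypothetical helper.

```python
# Back-of-envelope lower bound on per-token memory time: bytes / bandwidth.
# Assumptions: one chip's full 230 MB of SRAM is read once per token; the
# 2 TB/s off-chip figure is a hypothetical round number, not a measured spec.


def memory_time_us(bytes_moved: float, bandwidth_bytes_per_s: float) -> float:
    """Microseconds needed to move bytes_moved at the given bandwidth."""
    return bytes_moved / bandwidth_bytes_per_s * 1e6


BYTES_PER_TOKEN = 230e6  # one chip's SRAM contents, per the quoted spec
ON_DIE_BW = 80e12        # 80 TB/s, as quoted for the Groq LPU
OFF_CHIP_BW = 2e12       # hypothetical external-memory bandwidth

on_die = memory_time_us(BYTES_PER_TOKEN, ON_DIE_BW)
off_chip = memory_time_us(BYTES_PER_TOKEN, OFF_CHIP_BW)
print(f"on-die: {on_die:.3f} µs, off-chip: {off_chip:.1f} µs, "
      f"ratio: {off_chip / on_die:.0f}x")
```

Under these assumptions the on-die path is 40 times faster simply because 80 / 2 = 40; the real-world gap depends on the specific hardware being compared.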

Developing a processor designed for LLMs addresses a growing need within the AI research and development community for more specialized hardware solutions. This move could catalyze a new wave of innovation in AI hardware, leading to more specialized processing units tailored to different aspects of AI and machine learning workloads.

As computing continues to evolve, the introduction of the LPU alongside traditional components like CPUs and GPUs signals a new phase in hardware development, one that is increasingly specialized and optimized for the specific demands of advanced AI applications.

Source credit: cryptoslate.com